Common Video Streaming Errors & How to Fix Them

A streaming engineer once told us about the night their app suddenly went viral. Everything looked perfectly calm… right up until the traffic graph quietly turned vertical. Within minutes, half the audience was staring at buffering wheels, the kind that spin just slowly enough to make everyone wonder if their Wi-Fi is plotting against them. A few viewers dropped to 144p, some froze entirely, and the team had that universal engineering moment: “This was working five minutes ago.”

Nothing shakes user trust faster than a stream breaking at the exact moment people care the most.

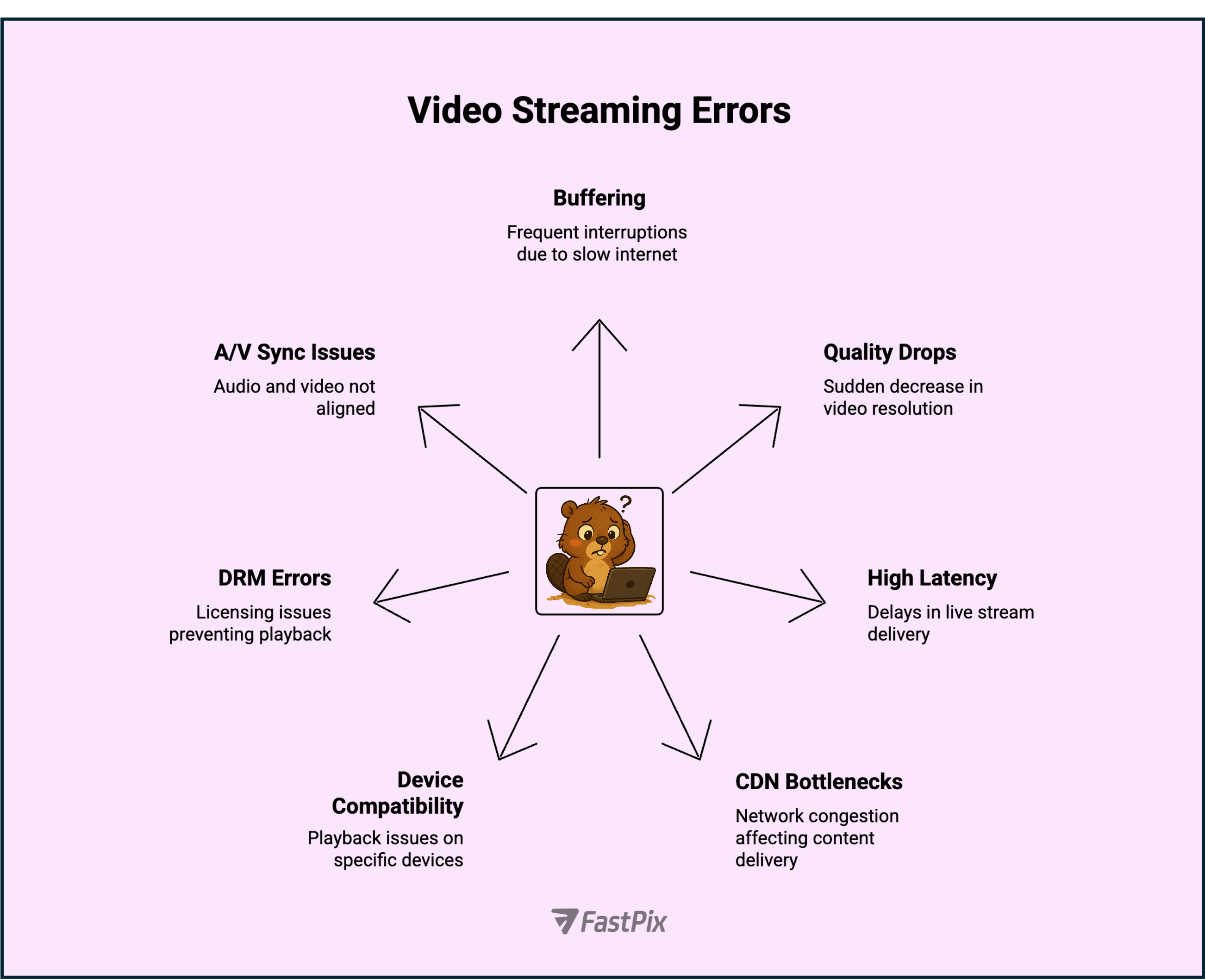

The truth is that issues like buffering, stuttering, random resolution drops, A/V mismatch, DRM warnings, or streams that politely refuse to load aren’t mysterious. They stem from predictable gaps in encoding, delivery, caching, device compatibility, or the player’s logic quietly hitting a corner case.

And heading into 2026, the margin for error is thinner than ever. Viewers expect smooth 1080p and 4K even on trains, elevators, and mobile networks that would struggle to load a PDF. One small hiccup, and the back button becomes the escape hatch.

From what we see at FastPix, these breakdowns rarely come from “bad internet”.

They come from avoidable engineering issues hidden somewhere along the pipeline.

In this guide, we’ll walk through the 7 most common video streaming errors developers face, why they happen, and the fixes that actually make a difference.

Most streaming issues begin long before the buffering wheel appears. A stream only breaks when the video pipeline can’t deliver data fast enough for real-time playback, and it doesn’t take much to fall behind. A slightly oversized segment, a slow CDN edge, or a player that reacts a second too late can turn a perfectly encoded video into a frustrating experience.

Every stream goes through the same journey: it’s encoded, packaged, distributed across CDNs, and finally decoded on a device that may or may not fully support the chosen codec or format. If even one link in that chain slows down, the viewer feels it instantly. A poorly tuned bitrate ladder overwhelms weak networks, a cache miss forces a round trip to the origin, and a codec the device struggles to decode leads to silently dropped frames. The symptom looks like “internet trouble”, but the root cause is usually upstream.

Imagine a viewer watching a clip on 4G during their commute. They move between towers, the signal dips, and the player has one job: adapt instantly. If your CDN hasn’t cached the content nearby, or if your ABR ladder can’t downshift smoothly, the buffer drains and playback stops. To the viewer, your app failed, even if the network only fluctuated for a few seconds.

The video freezes, but the issue might be a cold edge. Devices crash, but the trigger might be a single oversized segment. Once you understand how tightly encoding, delivery, caching, and playback depend on one another, diagnosing and fixing these issues becomes far more straightforward.

Now, let’s dive into the 7 most common video streaming errors and how to fix them.

Buffering happens when the player can’t fetch video segments fast enough to stay ahead of playback. This usually starts upstream: segments that are too heavy, bitrate ladders that don’t adapt smoothly, or CDN edges that don’t have the content cached close to the viewer.

Picture someone watching a clip on 4G. The network dips for a moment, which is totally normal, but if your segments are long or your ladder jumps from 6 Mbps straight to 2 Mbps with no middle options, the player reacts too slowly. The buffer drains, playback freezes, and the user instantly blames your app.

How to fix it:

Use modern codecs like H.265 or AV1 to reduce segment size. Build more granular bitrate ladders so the player can downshift smoothly instead of jumping across large gaps. Keep segments short (around 2–4 seconds) to let the player react faster to network changes, and ensure your CDN keeps popular content cached at the closest edges. Together, these tweaks significantly reduce mid-stream freezes on unstable networks.
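To see why segment length matters, here is a back-of-the-envelope buffer model in Python. It is a simplified sketch, not how any particular player works: it assumes the player must finish the segment it has already requested before it can downshift, and the buffer size, bitrate, and throughput figures are illustrative assumptions.

```python
def segment_download_s(segment_s: float, bitrate_kbps: float, throughput_kbps: float) -> float:
    """Seconds needed to fetch one segment: its size in kilobits divided by throughput."""
    return segment_s * bitrate_kbps / throughput_kbps


def stall_s(buffer_s: float, segment_s: float, bitrate_kbps: float, throughput_kbps: float) -> float:
    """Rebuffering time while the next segment downloads.

    Simplifying assumption: the player is committed to the rendition it already
    requested, so it can only downshift after this segment finishes downloading.
    """
    download = segment_download_s(segment_s, bitrate_kbps, throughput_kbps)
    return max(0.0, download - buffer_s)


if __name__ == "__main__":
    # 10 s of buffered video, a 6 Mbps rendition, and throughput that dips to 2 Mbps.
    for segment in (6, 2):
        print(f"{segment} s segments -> stall of {stall_s(10, segment, 6000, 2000):.1f} s")
```

Under these assumptions, a 6-second segment at 6 Mbps takes 18 seconds to arrive over a 2 Mbps link and stalls playback for 8 seconds, while a 2-second segment arrives in 6 seconds, leaving the player time to downshift before the buffer empties.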

Quality drops happen when the player can’t sustain the bitrate required for the current resolution. Instead of buffering, it shifts down, sometimes all the way to the lowest available quality, to keep the stream alive. This is why viewers sometimes jump from crisp 1080p to a blurry 144p within seconds, even on connections that seem “okay.”

A big reason this happens is an ABR ladder that isn’t tuned for real-world networks. If there aren’t enough mid-tier bitrates, the player has no smooth way to step down when bandwidth dips. Codec choices matter too: older codecs like H.264 need more bandwidth for the same quality, so even small fluctuations push the player into low resolutions. And if your ingest or CDN delivery is slow, the player interprets it as “low throughput” and switches down, often to the lowest quality, to avoid stalling.

Think of someone watching a 1080p stream in a crowded café. The Wi-Fi momentarily congests, but instead of gently shifting to 720p, the player drops all the way to 144p because your ladder doesn’t offer enough intermediate steps. The viewer assumes your platform “can’t handle HD,” even though the issue was just missing rungs on the ladder.

How to fix it:

Use more granular bitrate ladders with smoother steps between qualities, especially in the 300–1500 kbps range. Adopt modern codecs like H.265 or AV1 to deliver higher quality at lower bitrates. And monitor real throughput rather than just peak capacity; the goal is to keep the player in a stable, adaptive range rather than oscillating between extremes.
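The ladder problem can be made concrete with a small rendition-selection sketch. The rung bitrates, the 0.8 safety factor, and the 2× maximum step between adjacent rungs are hypothetical values chosen for illustration; real ABR algorithms (throughput-based, buffer-based, or hybrid) are considerably more involved.

```python
# Each rung is (label, bitrate in kbps). Values are illustrative, not a recommended ladder.
SPARSE = [("144p", 150), ("1080p", 4000)]
GRANULAR = [("144p", 150), ("240p", 300), ("360p", 600),
            ("480p", 1000), ("720p", 2000), ("1080p", 4000)]

SAFETY = 0.8  # hypothetical headroom: spend at most 80% of measured throughput


def pick_rung(ladder, throughput_kbps, safety=SAFETY):
    """Highest rung whose bitrate fits inside the safe throughput budget."""
    budget = throughput_kbps * safety
    fitting = [rung for rung in ladder if rung[1] <= budget]
    if not fitting:
        # Nothing fits: fall back to the lowest rung rather than stalling.
        return min(ladder, key=lambda rung: rung[1])
    return max(fitting, key=lambda rung: rung[1])


def ladder_gaps(ladder, max_ratio=2.0):
    """Adjacent rungs whose bitrate jump exceeds max_ratio (a hypothetical threshold)."""
    rungs = sorted(ladder, key=lambda rung: rung[1])
    return [(low[0], high[0]) for low, high in zip(rungs, rungs[1:])
            if high[1] / low[1] > max_ratio]


if __name__ == "__main__":
    for name, ladder in (("sparse", SPARSE), ("granular", GRANULAR)):
        label, kbps = pick_rung(ladder, throughput_kbps=3500)
        print(f"{name}: {label} @ {kbps} kbps, gaps={ladder_gaps(ladder)}")
```

When measured throughput dips to 3.5 Mbps, the sparse ladder can only fall back to 144p, while the granular ladder settles on 720p. `ladder_gaps` flags the sparse ladder’s single large jump, the kind of check you could run when reviewing an encoding profile.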

Latency becomes a problem when a live stream falls several seconds, or even tens of seconds, behind real time. Viewers notice it most during sports, gaming, auctions, live shopping, or anything interactive. Once the delay crosses a certain threshold, the stream stops feeling “live.”

Most latency issues start with the ingest and transcoding pipeline. Traditional protocols like RTMP introduce extra delay, and if your system transcodes sequentially or uses long GOP structures, you’re adding unnecessary seconds before the video even reaches the CDN. CDN hops add more delay, especially when the stream travels through distant or overloaded edges. And if your player or server uses long segment durations, the latency stacks up quickly.

A typical example: during a live cricket match, one viewer hears a cheer from their neighbour five seconds before seeing the boundary on their own screen, simply because their stream is stuck behind long segments, slow transcoding, or non-optimized routing. The connection may be fine; the workflow isn’t.

How to fix it:

Use low-latency protocols like SRT, LL-HLS, or WebRTC to reduce the gap from camera to viewer. Shorten segment duration to 2–3 seconds or use partial segments for LL-HLS. Optimize your ingest pipeline so transcoding happens in parallel, and route streams through CDNs with closer, well-distributed edges. With the right setup, glass-to-glass latency can drop dramatically without sacrificing stability.
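A rough latency budget shows why segment duration dominates. This sketch assumes the player holds back three segment durations from the live edge, in line with the HLS recommendation that clients start at least three target durations from the end of a live playlist, and, as a simplifying assumption, applies the same multiple to LL-HLS partial segments. The 2-second `overhead_s` for ingest, transcoding, and CDN delivery is a made-up figure you would replace with your own measurements.

```python
def live_latency_s(segment_s: float, overhead_s: float, hold_back: float = 3) -> float:
    """Rough glass-to-glass latency estimate.

    overhead_s: ingest + transcode + CDN delivery (an assumed figure; measure it in practice).
    hold_back:  how many segment (or LL-HLS part) durations the player stays behind the live edge.
    """
    return overhead_s + hold_back * segment_s


if __name__ == "__main__":
    cases = (("6 s HLS segments", 6), ("2 s HLS segments", 2), ("0.5 s LL-HLS parts", 0.5))
    for label, duration in cases:
        print(f"{label}: ~{live_latency_s(duration, overhead_s=2):.1f} s behind live")
```

Under these assumptions, 6-second segments land around 20 seconds behind real time, 2-second segments around 8 seconds, and 0.5-second LL-HLS parts around 3.5 seconds.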

When a stream stutters or takes forever to start, the issue often isn’t the video or the player: it’s the CDN. A CDN’s job is to serve video from the closest edge location so the viewer receives each segment quickly. But when that edge server doesn’t have the segment cached, the request travels all the way back to the origin. That extra round trip is enough to cause slow startup, mid-stream stalls, or choppy playback.

Cache misses happen for a few reasons: the content might be new or recently purged, the CDN may not have strong coverage in certain regions, or your caching rules may expire content too aggressively. During traffic spikes, overloaded edge nodes also struggle to keep up, which leads to inconsistent performance across geographies, even if your system looks healthy elsewhere.

Imagine launching a trailer that goes viral in Southeast Asia. If your CDN hasn’t pre-warmed caches or doesn’t have well-distributed edges in the region, the first wave of viewers will unknowingly pull content from your origin. The result is slow loading for them and unnecessary load on your infrastructure.

How to fix it:

Use a multi-CDN approach to route traffic to the fastest network in each region. Set sensible TTLs so frequently watched content stays warm at the edge, and pre-warm assets you expect to spike. Monitor cache hit ratios as a core metric: high hit ratios mean fast playback, while dips often signal issues before users notice.
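The routing and monitoring advice above can be sketched in a few functions. This assumes you already collect time-to-first-byte samples per CDN and per region, plus CDN access logs that expose a per-request cache status. The status values (`"HIT"`/`"MISS"`), the CDN names, and the 90% alert threshold are hypothetical, since real log formats differ by provider.

```python
from collections import defaultdict
from statistics import median

HIT_RATIO_ALERT = 0.90  # hypothetical threshold; tune per catalog and traffic pattern


def pick_cdn(ttfb_ms):
    """ttfb_ms: {cdn_name: [time-to-first-byte samples in ms]} measured from one region.
    Returns the CDN with the lowest median, which is less sensitive to outliers than the mean."""
    return min(ttfb_ms, key=lambda cdn: median(ttfb_ms[cdn]))


def hit_ratio_by_region(log_rows):
    """log_rows: iterable of (region, cache_status) pairs taken from CDN access logs."""
    hits, totals = defaultdict(int), defaultdict(int)
    for region, status in log_rows:
        totals[region] += 1
        if status.upper() == "HIT":
            hits[region] += 1
    return {region: hits[region] / totals[region] for region in totals}


def regions_to_alert(ratios, threshold=HIT_RATIO_ALERT):
    """Regions whose hit ratio dropped below the threshold, e.g. a cold edge after a launch."""
    return sorted(region for region, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    print(pick_cdn({"cdn_a": [120, 130, 500], "cdn_b": [90, 95, 100]}))  # cdn_b
    rows = [("sea", "HIT"), ("sea", "MISS"), ("sea", "MISS"), ("eu", "HIT"), ("eu", "HIT")]
    print(regions_to_alert(hit_ratio_by_region(rows)))  # ['sea']
```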

When a video loads perfectly on one device but refuses to play on another, the root cause is almost always a codec or format mismatch. Different browsers, OS versions, and hardware have wildly different levels of support for codecs like H.265, AV1, and VP9, and for containers and streaming formats like MP4, WebM, and HLS. If your workflow generates only a single format or relies heavily on newer codecs, some devices simply can’t decode the stream.

This usually shows up as:

A common example: many older Android devices can’t decode HEVC reliably, while some desktop browsers don’t support WebM or VP9. If your player doesn’t have a fallback path, the viewer hits a dead end, not because your stream is broken, but because their device can’t understand it.

How to fix it:

Support multiple codecs and formats, especially a safe fallback like H.264 + MP4/HLS. Let the player perform capability detection and automatically switch to a supported format when needed. And make sure your encoding pipeline outputs at least one universal format for older devices. This ensures that no viewer is blocked simply because their hardware or browser is behind.
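Capability-based fallback can be sketched as choosing the most efficient rendition the device says it can decode. In a browser, the set of supported codecs would come from checks such as `MediaSource.isTypeSupported()` or `HTMLMediaElement.canPlayType()` with full codec strings; here it is simplified to codec family names, and the rendition URLs are hypothetical.

```python
# Renditions a (hypothetical) encoding pipeline produced, most efficient first.
RENDITIONS = [
    ("av1", "video_av1.m3u8"),
    ("hevc", "video_hevc.m3u8"),
    ("h264", "video_h264.m3u8"),  # universal fallback for older devices
]


def check_pipeline(renditions):
    """Fail fast if the encoding output lacks a universal H.264 rendition."""
    if not any(codec == "h264" for codec, _ in renditions):
        raise ValueError("no H.264 rendition: older devices would have nothing to play")


def pick_rendition(device_codecs, renditions=RENDITIONS):
    """Return the most efficient rendition the device reports it can decode, or None."""
    for codec, url in renditions:
        if codec in device_codecs:
            return url
    return None  # caller should show a clear "unsupported device" message, not a spinner


if __name__ == "__main__":
    check_pipeline(RENDITIONS)
    print(pick_rendition({"hevc", "h264"}))  # video_hevc.m3u8
    print(pick_rendition({"h264"}))          # video_h264.m3u8
```

Listing every variant with its `CODECS` attribute in a single HLS multivariant playlist lets many players do this filtering themselves; an explicit check like this is mainly useful when choosing between separate manifests.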

DRM errors are among the most frustrating failures because everything looks like it should work: the player loads, the URL is valid, but the video refuses to start. These issues happen when the DRM system (Widevine, FairPlay, or PlayReady) can’t validate a license or decrypt content on the viewer’s device. To the user, it usually appears as “Playback failed” or a silent freeze.

Most DRM failures come from mismatched configurations: expired licenses, incorrect token signatures, unsupported device-level DRM, or differences in how browsers request keys. A token whose timestamps are off from the license server’s clock by even a few seconds can get the license request rejected. And on older devices, Widevine L3 may behave inconsistently, causing legitimate viewers to be blocked.

A real-world example: a user tries to watch a paid webinar. Their subscription is active, their internet is fine, but the license server sees an expired token or a wrong device ID. The result? Instant denial. From the viewer’s perspective, the platform “doesn’t work,” even though the content is perfectly valid behind the scenes.

How to fix it:

Use a centralized license and token management system so tokens and licenses never expire mid-session. Validate time sync across servers, standardize token generation logic, and ensure your player shows clear error messages instead of generic failures. Test DRM across multiple devices and browsers, especially older Android phones and smart TVs, to catch compatibility issues early.
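One way to keep tokens from expiring mid-session while tolerating small clock drift is to sign the whole session window into the token and validate it with a leeway. The sketch below uses an HMAC-signed token with a hypothetical format and an assumed 30-second leeway. It is not the token scheme of FastPix or of any DRM vendor, and it covers only the entitlement check that typically sits in front of a Widevine, FairPlay, or PlayReady license server, not the license exchange itself.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-secret-change-me"  # hypothetical secret shared by token issuer and license proxy
LEEWAY_S = 30                      # assumed tolerance for clock skew between servers


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(body: str) -> str:
    return _b64(hmac.new(SECRET, body.encode(), hashlib.sha256).digest())


def issue_token(content_id: str, session_s: int, now: Optional[float] = None) -> str:
    """Issue a token valid for the whole session so it cannot expire mid-playback."""
    now = time.time() if now is None else now
    claims = {"cid": content_id, "nbf": int(now), "exp": int(now) + session_s}
    body = _b64(json.dumps(claims, separators=(",", ":")).encode())
    return f"{body}.{_sign(body)}"


def validate_token(token: str, content_id: str, now: Optional[float] = None) -> bool:
    """Check signature, content binding, and time window (with clock-skew leeway)."""
    now = time.time() if now is None else now
    parts = token.split(".")
    if len(parts) != 2 or not hmac.compare_digest(parts[1].encode(), _sign(parts[0]).encode()):
        return False
    claims = json.loads(_unb64(parts[0]))
    if claims.get("cid") != content_id:
        return False
    return claims["nbf"] - LEEWAY_S <= now <= claims["exp"] + LEEWAY_S


if __name__ == "__main__":
    token = issue_token("webinar-42", session_s=3600, now=1_000_000)
    print(validate_token(token, "webinar-42", now=999_990))    # True: server clock 10 s behind
    print(validate_token(token, "webinar-42", now=1_003_700))  # False: session window long over
```

A license proxy would call `validate_token` before forwarding the license request. Because every server applies the same issuer secret and the same leeway, a few seconds of clock skew no longer turns into a generic “Playback failed”.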

When the audio leads or lags behind the video, viewers notice it instantly and it breaks immersion faster than almost any other error. A/V sync issues usually appear during fast-paced content like sports, fitness classes, gaming, or talk shows where lip-sync matters. Even a 200–300 ms drift feels “wrong.”

These issues often start during encoding. If audio and video tracks aren’t aligned with consistent timestamps, the player struggles to render them together. Frame rate mismatches, variable frame rate sources, or poorly tuned GOP structures can all introduce tiny timing offsets that grow over the duration of the video. In live streams, network fluctuations or jitter amplify this drift, especially if the player lacks logic to correct timing deviations on the fly.

A common example is a viewer watching a live workout session. The instructor says “jump” but the visual cue comes half a second later. Nothing technically “breaks,” but the user experience suffers, and engagement drops quickly because the content no longer feels synchronized or usable.

How to fix it:

Ensure precise timestamp alignment during encoding and use consistent frame rates across all renditions. For live streaming, enable player-side drift correction so audio and video can re-sync dynamically during temporary network instability. And avoid mixing variable and constant frame rate sources in the same workflow; this is one of the easiest ways to prevent long-form sync drift.
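The way small timing offsets grow over long content is easy to quantify. The first function below computes how far video drifts from audio when NTSC-rate footage (exactly 30000/1001 fps, usually written as 29.97) is played back as if it were 30 fps while audio keeps real time; the second is a minimal drift check of the kind a player could run before correcting. The 100 ms tolerance is a hypothetical threshold, not a standard.

```python
from fractions import Fraction


def fps_mismatch_drift_s(true_fps, assumed_fps, duration_s):
    """Seconds by which video runs ahead of audio after duration_s of content,
    when frames captured at true_fps are played back at assumed_fps and the
    audio track keeps real time. Fractions keep the arithmetic exact."""
    frames = Fraction(true_fps) * duration_s
    playback_s = frames / Fraction(assumed_fps)
    return float(duration_s - playback_s)


def first_out_of_sync(pairs, tolerance_s=0.1):
    """pairs: (audio_pts_s, video_pts_s) sampled at the same playback instants.
    Returns the index of the first sample whose offset exceeds tolerance_s, or None."""
    for index, (audio_pts, video_pts) in enumerate(pairs):
        if abs(audio_pts - video_pts) > tolerance_s:
            return index
    return None


if __name__ == "__main__":
    ntsc = Fraction(30000, 1001)  # the rate usually written as 29.97 fps
    print(f"{fps_mismatch_drift_s(ntsc, 30, 60) * 1000:.0f} ms after 1 minute")
    print(f"{fps_mismatch_drift_s(ntsc, 30, 3600):.1f} s after 1 hour")
    print(first_out_of_sync([(0.0, 0.0), (1.0, 1.05), (2.0, 2.2)]))  # 2
```

A mismatch of about 0.1% is invisible at first (roughly 60 ms after one minute) but grows to about 3.6 seconds over an hour, which is why consistent frame rates across renditions matter most for long-form content.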

Every streaming error covered above (buffering, quality drops, latency, CDN misses, device failures, DRM errors, and A/V sync drift) comes from one root problem: fragmented workflows. Encoding is handled in one place, delivery in another, DRM somewhere else, and the player struggles to stitch it all together.

FastPix fixes this by giving you a unified video pipeline where encoding, delivery, caching, playback, latency optimization, and DRM all work together instead of fighting each other.

Here’s how FastPix eliminates each failure point in practice:

No more buffering or stalls

FastPix encodes every video using modern codecs (H.265 and AV1) and builds intelligent per-title bitrate ladders with smaller segment sizes. Pair that with multi-CDN routing and warm edge caching, and the player almost never runs out of data, even during network dips.

Quality stays stable, even on bad networks

Instead of jumping from HD to 144p, the FastPix player uses smoother ABR steps and real throughput detection. It downshifts early, recovers quickly, and avoids aggressive oscillations.

Live streams run with ultra-low latency

FastPix uses SRT, LL-HLS, parallel live transcoding, and short GOPs so the delay stays consistently low. Sports, gaming, auctions, and live shopping remain in sync with real time.

CDN bottlenecks disappear

The multi-CDN engine chooses the fastest path for each viewer automatically. Cache-hit ratios stay high, edges stay warm, and traffic reroutes instantly if one CDN slows down.

Every device can play the video

FastPix outputs H.264, H.265, AV1, MP4, HLS, and DASH, covering old Android phones, new iPhones, older smart TVs, and modern browsers. The player auto-detects capability and switches formats seamlessly.

DRM “Playback Failed” errors drop to near-zero

With centralized token management, correct timestamping, and Widevine, FairPlay, and PlayReady handled by one system, FastPix DRM prevents silent license failures that block viewers.

Audio and video stay perfectly in sync

FastPix aligns timestamps at the encoder and uses player-side drift correction so network jitter doesn’t cause A/V drift, even during long sessions.

FastPix replaces the patchwork of tools that normally handle streaming with a single workflow designed for stability at scale, whether you're delivering a classroom lecture, a global sports stream, or a short-video feed.

We’d love to hear how you’re handling streaming issues in your product. Drop us a note; your feedback helps us improve FastPix for builders like you. If you want to try these fixes yourself, you can sign up and get started in minutes.

Buffering often comes from oversized segments, poorly tuned bitrate ladders, or CDN cache misses rather than weak networks. Even with strong bandwidth, if the player can’t download segments fast enough due to upstream inefficiencies, playback stalls.

Quality drops when the ABR ladder lacks enough mid-tier bitrates or when the codec requires more bandwidth than the network can provide. The player aggressively downshifts to avoid stalling, resulting in sudden drops to very low quality.

Streaming services often face buffering, latency, and video quality fluctuations due to network issues. Content restrictions from licensing can limit access, and high data usage may be a concern for users with limited plans.

Stream processing faces challenges like scalability, fault tolerance, and event ordering. Additionally, cost optimization and maintaining low latency are key concerns.