How to build a short video platform with Node.js

Let’s say you’ve been asked to build something like TikTok or Instagram. Or maybe it’s an education platform with short video lessons. Either way, people need to upload videos, other people need to watch them, and you need to make it all work.

If you’re using Node.js, you’ve made a solid choice for building APIs, managing queues, and connecting services.

But here’s the part that’s usually missing from the “just build it” conversation:

video is its own system.

We’ll walk you through how short video apps actually work: not just the frontend logic, but the full backend pipeline.

You’ll learn:

By the end, you’ll have a clear picture of the full stack, and a much faster way to get it working in production.

A short video app has a lot going on under the hood.

Yes, you’ll use Node.js for the backend. But beyond routing and databases, here’s what you’re really signing up to support:

This is the baseline.

Some apps start with just uploads and playback. But most of these building blocks show up sooner than you think. Understanding the full scope early means you won’t have to rip it apart later.

Short video apps aren’t just “upload and play.”

Your backend needs to do a lot more, and Node.js is a solid fit for wiring it all together.

Here’s what your backend is responsible for, and which open source tools can make that faster to build:

Your Node.js backend becomes the glue coordinating APIs, queues, feeds, and data.

And with these open source tools, you avoid rebuilding the basics from scratch.

There are two ways to approach video infrastructure:

Option 1: Stitch everything together yourself: FFmpeg for encoding, Shaka Packager for HLS, NGINX for delivery, secure token logic, OpenTelemetry for metrics, plus your own SDKs and retries for uploads.

Option 2: Use a video API that handles those layers for you.

FastPix fits into the second path. It doesn’t replace your backend; it simplifies the parts that are deeply video-specific and time-consuming to build from scratch.

Here’s where FastPix helps:

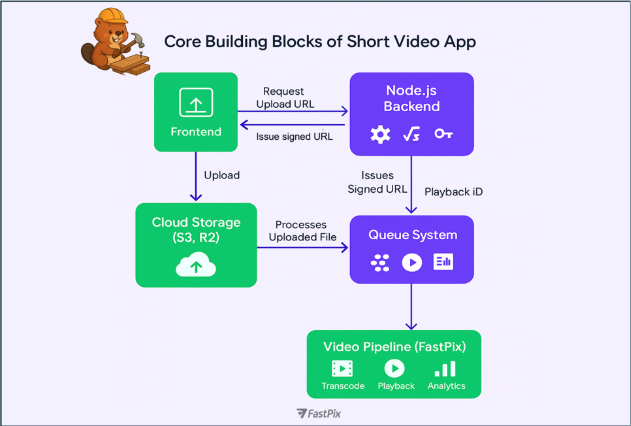

Think of your architecture in three parts:

Now if you still want to build it all yourself, we’ve covered that too in the next section.

Let’s say you decide to build the video stack yourself, no video API, no managed service.

Here’s what that means in practice:

First, you’ll need to accept large video uploads from mobile devices. These uploads will vary in size, duration, and format. They might time out. You’ll have to deal with retries, file corruption, and edge-case bugs from slow networks or older browsers.
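To make the retry problem concrete, here is a minimal sketch of how a backend or client might plan a chunked upload and back off between failed attempts. The helper names (`planChunks`, `backoffDelay`, `uploadChunkWithRetry`), the 5 MB chunk size, and the delay values are illustrative assumptions, not part of any SDK. `sendChunk` is a caller-supplied function that is assumed to reject when a chunk fails.

```javascript
// Hypothetical helpers for illustration; names and defaults are assumptions.

// Split a file of `fileSize` bytes into inclusive byte ranges of at most `chunkSize` bytes.
function planChunks(fileSize, chunkSize = 5 * 1024 * 1024) {
  if (!Number.isInteger(fileSize) || fileSize < 0) {
    throw new RangeError("fileSize must be a non-negative integer");
  }
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const chunks = [];
  for (let start = 0; start < fileSize; start += chunkSize) {
    const end = Math.min(start + chunkSize, fileSize) - 1;
    chunks.push({ index: chunks.length, start, end });
  }
  return chunks;
}

// Exponential backoff delay in milliseconds for a 0-based retry attempt, capped at maxMs.
function backoffDelay(attempt, baseMs = 500, maxMs = 30000) {
  return Math.min(baseMs * 2 ** attempt, maxMs);
}

// Try one chunk up to `maxAttempts` times, waiting longer after each failure.
async function uploadChunkWithRetry(sendChunk, chunk, maxAttempts = 5) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await sendChunk(chunk);
    } catch (err) {
      if (attempt === maxAttempts - 1) throw err; // out of attempts, surface the error
      await new Promise((resolve) => setTimeout(resolve, backoffDelay(attempt)));
    }
  }
}
```

Because each chunk is retried on its own, a dropped connection costs one chunk rather than the whole file. A production version would also need to verify chunk integrity and resume after an app restart, which this sketch leaves out.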

Once the file reaches your server, you’ll need to process it. That usually means running FFmpeg to transcode the video into multiple resolutions (1080p, 720p, 480p) so you can support adaptive playback. But encoding isn’t lightweight: it’s CPU-intensive, especially if several users upload videos at once. You’ll likely need a queue system like BullMQ just to keep things from falling over.
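As a rough illustration of what the transcoding step involves, the sketch below builds one FFmpeg argument list per rendition. The bitrate values, file paths, and the `buildFfmpegArgs` helper are assumptions chosen for the example; a real ladder should be tuned to your content.

```javascript
// Illustrative rendition ladder; the bitrates are assumptions, not recommendations.
const RENDITIONS = [
  { name: "1080p", height: 1080, videoBitrate: "5000k" },
  { name: "720p", height: 720, videoBitrate: "2800k" },
  { name: "480p", height: 480, videoBitrate: "1400k" },
];

// Build the FFmpeg CLI arguments for a single rendition.
function buildFfmpegArgs(inputPath, outputDir, rendition) {
  return [
    "-y", // overwrite the output file if it exists
    "-i", inputPath,
    "-vf", `scale=-2:${rendition.height}`, // keep aspect ratio, force an even width
    "-c:v", "libx264",
    "-b:v", rendition.videoBitrate,
    "-c:a", "aac",
    "-b:a", "128k",
    `${outputDir}/${rendition.name}.mp4`,
  ];
}

// One job description per rendition, ready to hand to a queue worker.
const jobs = RENDITIONS.map((rendition) => ({
  rendition: rendition.name,
  args: buildFfmpegArgs("input.mp4", "out", rendition),
}));
```

A queue worker would then run each job with something like `child_process.spawn("ffmpeg", job.args)`, with concurrency limited so simultaneous uploads don’t saturate the CPU.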

Then there’s packaging. Adaptive streaming protocols like HLS or DASH require segmenting the video into tiny chunks, usually 2–6 seconds each, and generating manifest files that tell players how to switch between bitrates. You’ll need to store and serve these files correctly, and deal with CDN caching rules to make sure playback actually works on every device.
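For a sense of what a manifest looks like, here is a small sketch that generates an HLS master playlist pointing at per-rendition playlists. The `buildMasterPlaylist` helper, the variant URIs, and the bandwidth numbers are hypothetical; segmenting the video and writing the per-rendition playlists is a separate step handled by your packager.

```javascript
// Build an HLS master playlist from a list of variants.
// Each variant: { bandwidth (bits per second), width, height, uri }.
function buildMasterPlaylist(variants) {
  const lines = ["#EXTM3U", "#EXT-X-VERSION:3"];
  for (const v of variants) {
    lines.push(
      `#EXT-X-STREAM-INF:BANDWIDTH=${v.bandwidth},RESOLUTION=${v.width}x${v.height}`
    );
    lines.push(v.uri); // the URI line must directly follow its STREAM-INF tag
  }
  return lines.join("\n") + "\n";
}

// Example values are assumptions for illustration.
const masterPlaylist = buildMasterPlaylist([
  { bandwidth: 5128000, width: 1920, height: 1080, uri: "1080p/index.m3u8" },
  { bandwidth: 2928000, width: 1280, height: 720, uri: "720p/index.m3u8" },
  { bandwidth: 1528000, width: 854, height: 480, uri: "480p/index.m3u8" },
]);
```

The player reads this file first, picks a variant based on measured bandwidth, and switches between them as conditions change.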

You also need to secure playback. That means writing your own token logic: signed URLs with expiration windows, referrer validation, and possibly user-agent restrictions so the video can’t be ripped and shared freely.
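A minimal sketch of the signed-URL idea, using Node’s built-in `crypto` module, might look like this. The query parameter names (`expires`, `sig`) and the helper functions are assumptions for illustration; referrer and user-agent checks are left out.

```javascript
const crypto = require("node:crypto");

// Compute an HMAC-SHA256 signature over the path and expiry timestamp.
function computeSignature(path, expiresAt, secret) {
  return crypto
    .createHmac("sha256", secret)
    .update(`${path}:${expiresAt}`)
    .digest("hex");
}

// Return a path with an expiry (Unix seconds) and signature attached.
function signPlaybackUrl(path, secret, expiresAt) {
  const sig = computeSignature(path, expiresAt, secret);
  return `${path}?expires=${expiresAt}&sig=${sig}`;
}

// Check that the link has not expired and the signature matches.
function verifyPlaybackUrl(path, expires, sig, secret, now = Math.floor(Date.now() / 1000)) {
  if (!Number.isFinite(expires) || expires < now) return false;
  const expected = Buffer.from(computeSignature(path, expires, secret), "hex");
  const received = Buffer.from(String(sig), "hex");
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return expected.length === received.length && crypto.timingSafeEqual(expected, received);
}
```

The server or CDN edge that serves the manifest runs `verifyPlaybackUrl` before responding. Because the path is part of the signed payload, a valid signature for one video can’t be reused for another.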

Now you’ll need a player. Most teams grab a JavaScript player like Hls.js, Video.js or Shaka Player, but integrating it across web, iOS, and Android takes time and each one has quirks. Safari handles HLS differently than Chrome. Android’s WebView may behave differently than Chrome on Android.

And then there’s observability. You’ll want to track how the video is performing: did it buffer? Did the user finish it? Did they drop off after 3 seconds? You’ll need to build those metrics pipelines and dashboards yourself or run a third-party analytics tool alongside your stack.
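To show what the simplest version of those metrics could look like, here is a sketch that summarizes a batch of playback sessions. The session shape (`watchedSeconds`, `durationSeconds`, `bufferEvents`) and the `summarizePlayback` helper are hypothetical; a real pipeline would collect these from player events and store them somewhere queryable.

```javascript
// Summarize playback sessions into completion, early drop-off, and buffering stats.
// Each session: { watchedSeconds, durationSeconds, bufferEvents } (assumed shape).
function summarizePlayback(sessions, earlyDropSeconds = 3) {
  const total = sessions.length;
  if (total === 0) {
    return { total: 0, completionRate: 0, earlyDropRate: 0, avgBufferEvents: 0 };
  }
  let completed = 0;
  let earlyDrops = 0;
  let bufferEvents = 0;
  for (const s of sessions) {
    if (s.watchedSeconds >= s.durationSeconds) {
      completed++; // the viewer reached the end
    } else if (s.watchedSeconds <= earlyDropSeconds) {
      earlyDrops++; // the viewer left within the first few seconds
    }
    bufferEvents += s.bufferEvents;
  }
  return {
    total,
    completionRate: completed / total,
    earlyDropRate: earlyDrops / total,
    avgBufferEvents: bufferEvents / total,
  };
}
```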

Finally: scaling. What happens when 100 people upload videos at once? What happens if your encoding queue gets backed up? What if you serve 10,000 videos tomorrow and your S3 bill triples overnight?

These are solvable problems, but they require infrastructure, monitoring, and time. And they’ll take focus away from everything else you’re building.

You’ve got plenty to focus on already: auth, feeds, personalization. So unless you really want to maintain a transcoding pipeline and HLS origin setup, it probably makes sense to hand off the video part.

That’s where the FastPix Node.js SDK comes in. It gives you just enough control to keep everything integrated into your backend, without pulling you into infrastructure land.

Instead of writing custom fetch calls or juggling payloads, the SDK gives you a simple interface for core video actions:

```shell
npm install @fastpix/fastpix-node
```

```javascript
const FastPix = require("@fastpix/fastpix-node").default;

// Credentials are read from environment variables.
const fastpix = new FastPix({
  accessTokenId: process.env.FASTPIX_TOKEN_ID,
  secretKey: process.env.FASTPIX_SECRET_KEY,
});
```

To ingest a video:

```javascript
// Run inside an async function; fileUrl is the URL of the uploaded file.
const response = await fastpix.uploadMediaFromUrl({
  inputs: [{ type: "video", url: fileUrl }],
  metadata: { title: "My test upload" },
  accessPolicy: "public", // or "signed"
});
```

To get a playback ID:

```javascript
// Uses the media ID returned by the ingest call.
const playback = await fastpix.mediaPlaybackIds.create(response.data.id, {
  accessPolicy: "public",
});
```

From there, just store the media ID and playback ID in your DB and return them in your /feed API.
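As a sketch of that last step, the example below keeps video records in an in-memory `Map` standing in for your database and builds the payload a /feed route could return. The record fields and the `saveVideo` and `getFeed` helpers are assumptions for illustration, not part of the FastPix SDK.

```javascript
// In-memory stand-in for a database table; swap for your real data layer.
const videos = new Map();

// Store the IDs returned by the video API alongside your own metadata.
function saveVideo({ mediaId, playbackId, title, createdAt = Date.now() }) {
  if (!mediaId || !playbackId) {
    throw new Error("mediaId and playbackId are required");
  }
  videos.set(mediaId, { mediaId, playbackId, title, createdAt });
}

// Return the newest videos first, trimmed to what the client needs.
function getFeed({ limit = 10 } = {}) {
  return [...videos.values()]
    .sort((a, b) => b.createdAt - a.createdAt)
    .slice(0, limit)
    .map(({ mediaId, playbackId, title }) => ({ mediaId, playbackId, title }));
}
```

In an Express-style app, the /feed handler would call `getFeed()` and send the result as JSON. Sorting by recency is only one option; a trending score could replace `createdAt` in the comparator.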

The same SDK lets you manage live streams too:

You still handle routing and UI; FastPix takes care of ingest, transcoding, and delivery.

For a more detailed walkthrough of the process, see our docs and guides.

Your app stays fully in control. You still build:

FastPix just replaces the part where you’d otherwise:

It’s still your app, just with the video part abstracted into a clean SDK that fits the Node.js ecosystem.

Note: While this guide uses Node.js, FastPix also offers SDKs for other languages, including Python, Go, PHP, Ruby, and C#, so you can build in the stack that works best for you.

The flow: upload → process → playback

Here’s what the video flow looks like when you use FastPix, from the moment a user records a video to when it shows up in someone else’s feed:

1. Frontend (Web or Mobile): A user selects or records a video. The app hits your backend for an upload token.

2. Node.js Backend: Your /upload-token route returns a presigned URL or sets up a direct upload using the FastPix Upload SDK, which handles chunked uploads, retries, and edge cases on mobile.

3. Storage: The video uploads directly to your cloud storage (like S3 or R2). Once done, your background worker picks it up.

4. FastPix Ingest: Using the FastPix Node.js SDK, your backend sends the file URL to FastPix.

FastPix takes care of:

5. Processing status: You can either poll for status or receive a webhook from FastPix when processing is complete.

6. Playback ready: Your backend fetches the playbackId and stores it alongside the video’s metadata in your database.

7. Feed delivery: Your /feed route returns a list of videos, each with a playbackId, sorted however you like (recency, trending, etc.).

8. Playback: On the frontend, videos can be played using:

When building a short video app, this is the part no one warns you about.

User uploads = risk.

Some videos take off before anyone’s had a chance to review them. That’s the risk with UGC: when content moves fast, moderation has to move faster.

FastPix lets you build that into your flow. Every upload is scanned during processing. You get a confidence score for NSFW content, and can trigger a webhook if something needs a second look before it reaches your audience. Go through the guides to understand it better.
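One way to act on a confidence score in your own backend is a simple threshold rule, sketched below. The score range (0 to 1), the threshold values, and the `decideModeration` helper are assumptions for illustration; check the moderation guides for the actual payload shape and score semantics.

```javascript
// Map an NSFW confidence score (assumed to be between 0 and 1) to an action.
function decideModeration(nsfwScore, { reviewAt = 0.5, blockAt = 0.9 } = {}) {
  if (typeof nsfwScore !== "number" || Number.isNaN(nsfwScore) || nsfwScore < 0 || nsfwScore > 1) {
    throw new RangeError("nsfwScore must be a number between 0 and 1");
  }
  if (nsfwScore >= blockAt) return "block"; // keep out of feeds entirely
  if (nsfwScore >= reviewAt) return "review"; // hold for a human moderator
  return "publish"; // safe enough to go live
}
```

A webhook handler could call `decideModeration` when processing finishes and only mark the video as feed-eligible when the result is `"publish"`.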

But moderation is only one part of the transformation story.

You can also add outros when users download a clip. Watermark videos before they go live. Auto-generate GIFs for your feed. Replace audio, subtitles, trim highlights, all while the video is being processed. To learn more about what else you can transform, go through our features section.

Most UGC platforms want to catch risky content before it spreads too fast. If a video starts gaining traction unusually quickly, it’s often flagged for human review, just to make sure it’s safe before reaching a larger audience.

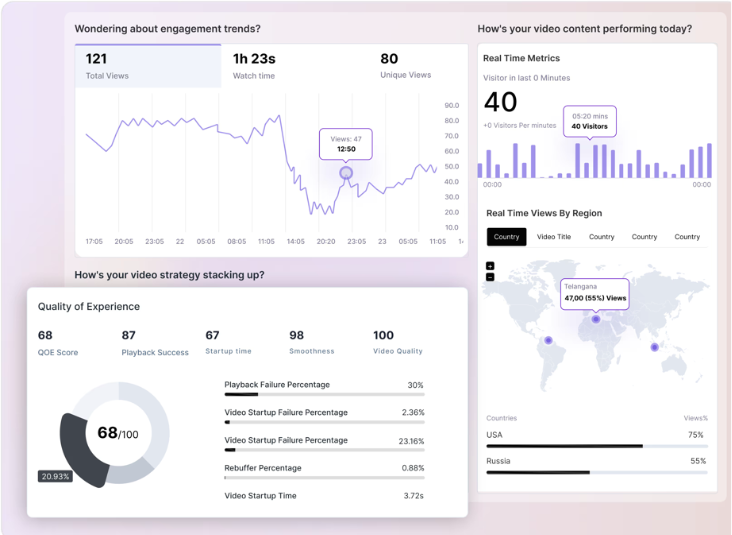

With the FastPix Video Data SDK, you can build that logic directly into your app.

Track view spikes, drop-offs, completions, and playback patterns, then route specific videos for moderation based on real-time data. No need to wire up custom events or bolt on third-party trackers.
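The routing rule itself can be quite small. The sketch below flags a video when its latest per-minute view count jumps well above its recent average. The input shape (an array of per-minute view counts), the default thresholds, and the `isViewSpike` helper are all assumptions for illustration; how you pull those counts from your analytics data will differ.

```javascript
// Flag a spike when the latest minute's views exceed `factor` times the
// average of the previous `window` minutes and pass a minimum volume.
function isViewSpike(viewsPerMinute, { window = 5, factor = 3, minViews = 50 } = {}) {
  if (viewsPerMinute.length <= window) return false; // not enough history yet
  const latest = viewsPerMinute[viewsPerMinute.length - 1];
  const previous = viewsPerMinute.slice(-1 - window, -1);
  const baseline = previous.reduce((sum, v) => sum + v, 0) / window;
  return latest >= minViews && latest > baseline * factor;
}
```

The `minViews` floor keeps tiny videos from tripping the rule: going from 2 views to 10 is a fivefold jump, but not one worth a moderator’s time.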

It’s all built in, and fully customizable to your platform’s trust and safety needs.

You’ve got the APIs, the database, and the background jobs; the core app is yours. But video? That’s a whole different system. FastPix gives you the full pipeline (uploads, encoding, playback, and analytics) in one SDK.

If you want to see how it fits into your stack, sign up and try it out. Or talk to us; we’re happy to help.